08 - Common problems & bad data situations¶

In this notebook, we will revise common problems that might come up when dealing with real-world data.

Maintainers: [@thempel](https://github.com/thempel), [@cwehmeyer](https://github.com/cwehmeyer), [@marscher](https://github.com/marscher), [@psolsson](https://github.com/psolsson)

Remember: - to run the currently highlighted cell, hold ⇧ Shift and press ⏎ Enter; - to get help for a specific function, place the cursor within the function’s brackets, hold ⇧ Shift, and press ⇥ Tab; - you can find the full documentation at PyEMMA.org.

Most problems in Markov modeling of MD data arise from bad sampling combined with a poor discretization. For estimating a Markov model, it is required to have a connected data set, i.e., we must have observed each process we want to describe in both directions. PyEMMA checks if this requirement is fulfilled but, however, in certain situations this might be less obvious.

[1]:

%matplotlib inline

import matplotlib.pyplot as plt

import numpy as np

import mdshare

import pyemma

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/mdshare/repository.py:53: YAMLLoadWarning: calling yaml.load() without Loader=... is deprecated, as the default Loader is unsafe. Please read https://msg.pyyaml.org/load for full details.

data = load(fh)

Case 1: preprocessed, two-dimensional data (toy model)¶

well-sampled double-well potential¶

Let’s again have a look at the double-well potential. Since we are only interested in the problematic situations here, we will simplify our data a bit and work with a 1D projection.

[2]:

file = mdshare.fetch('hmm-doublewell-2d-100k.npz', working_directory='data')

with np.load(file) as fh:

data = [fh['trajectory'][:, 1]]

Since this particular example is simple enough, we can define a plotting function that combines histograms with trajectory data:

[3]:

def plot_1D_histogram_trajectories(data, cluster=None, max_traj_length=200, ax=None):

if ax is None:

fig, ax = plt.subplots()

for n, _traj in enumerate(data):

ax.hist(_traj, bins=30, alpha=.33, density=True, color='C{}'.format(n));

ylims = ax.get_ylim()

xlims = ax.get_xlim()

for n, _traj in enumerate(data):

ax.plot(

_traj[:min(len(_traj), max_traj_length)],

np.linspace(*ylims, min(len(_traj), max_traj_length)),

alpha=0.6, color='C{}'.format(n), label='traj {}'.format(n))

if cluster is not None:

ax.plot(

cluster.clustercenters[cluster.dtrajs[n][:min(len(_traj), max_traj_length)], 0],

np.linspace(*ylims, min(len(_traj), max_traj_length)),

'.-', alpha=.6, label='dtraj {}'.format(n), linewidth=.3)

ax.annotate(

'', xy=(0.8500001 * xlims[1], 0.7 * ylims[1]), xytext=(0.85 * xlims[1], 0.3 * ylims[1]),

arrowprops=dict(fc='C0', ec='None', alpha=0.6, width=2))

ax.text(0.86 * xlims[1], 0.5 * ylims[1], '$x(time)$', ha='left', va='center', rotation=90)

ax.set_xlabel('TICA coordinate')

ax.set_ylabel('histogram counts & trajectory time')

ax.legend(loc=2)

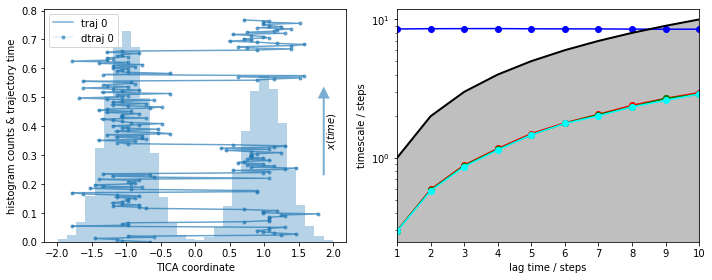

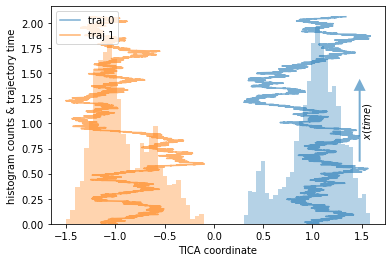

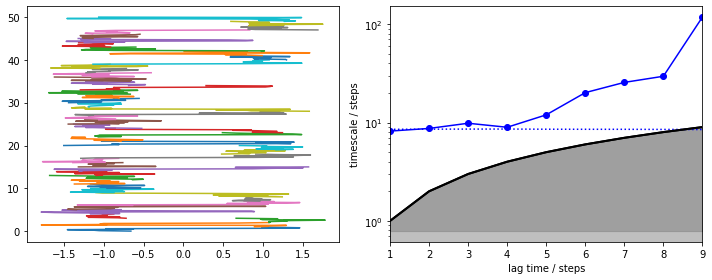

As a reference, we visualize the histogram of this well-sampled trajectory along with the first \(200\) steps (left panel) and the MSM implied timescales (right panel):

[4]:

fig, axes = plt.subplots(1, 2, figsize=(10, 4))

cluster = pyemma.coordinates.cluster_regspace(data, dmin=0.05)

plot_1D_histogram_trajectories(data, cluster=cluster, ax=axes[0])

lags = [i + 1 for i in range(10)]

its = pyemma.msm.its(cluster.dtrajs, lags=lags)

pyemma.plots.plot_implied_timescales(its, marker='o', ax=axes[1], nits=4)

fig.tight_layout()

We see a nice, reversibly connected trajectory. That means we have sampled transitions between the basins in both directions that are correctly resolved by the discretization. As we see from the almost perfect overlay of discrete and continuous trajectory, nearly no discretization error is made.

irreversibly connected double-well trajectories¶

In MD simulations, we often face the problem that a process is sampled only in one direction. For example, consider protein-protein binding. The unbinding might take on the order of seconds to minutes and is thus difficult to sample. We will have a look what happens with the MSM in this case.

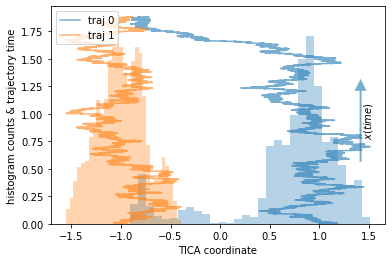

Our example are two trajectories sampled from a double-well potential, each started in a different basin. They will be color coded.

[5]:

file = mdshare.fetch('doublewell_oneway.npy', working_directory='data')

data = [trj for trj in np.load(file)]

plot_1D_histogram_trajectories(data, max_traj_length=data[0].shape[0])

We note that the orange trajectory does not leave its potential well while the blue trajectory does overcome the barrier exactly once.

⚠️ Even though we have sampled one direction of the process, we do not sample the way out of one of the potential wells, thus effectively finding a sink state in our data.

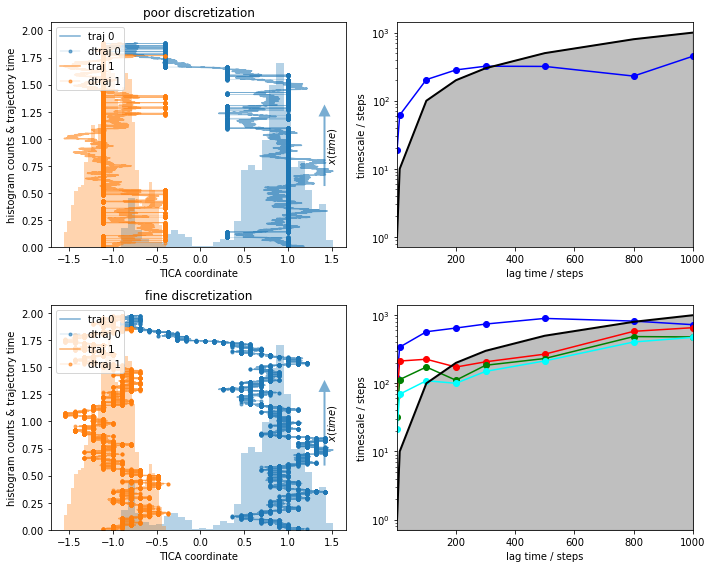

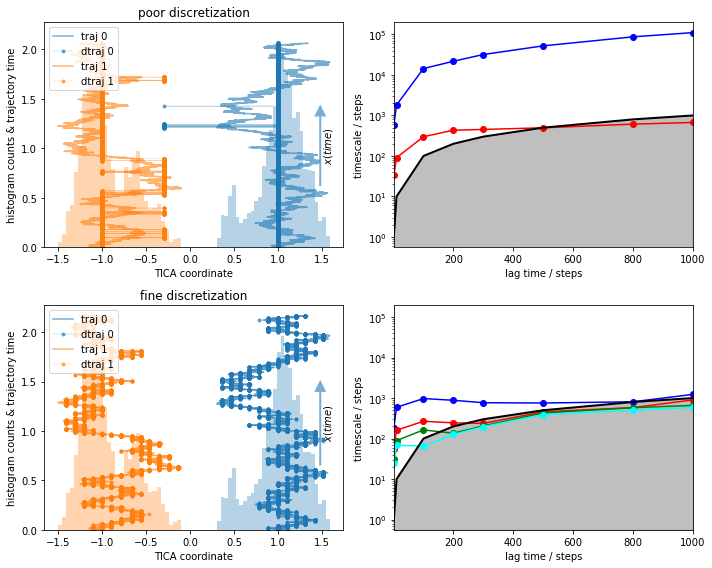

Let’s have a look at the MSM. Since in higher dimensions, we often face the problem of poor discretization, we will simulate this situation by using too few cluster centers.

[6]:

cluster_fine = pyemma.coordinates.cluster_regspace(data, dmin=0.1)

cluster_poor = pyemma.coordinates.cluster_regspace(data, dmin=0.7)

print(cluster_fine.n_clusters, cluster_poor.n_clusters)

29 4

[7]:

fig, axes = plt.subplots(2, 2, figsize=(10, 8), sharey='col')

for cluster, ax in zip([cluster_poor, cluster_fine], axes):

plot_1D_histogram_trajectories(data, cluster=cluster, max_traj_length=data[0].shape[0], ax=ax[0])

its = pyemma.msm.its(cluster.dtrajs, lags=[1, 10, 100, 200, 300, 500, 800, 1000])

pyemma.plots.plot_implied_timescales(its, marker='o', ax=ax[1], nits=4)

axes[0, 0].set_title('poor discretization')

axes[1, 0].set_title('fine discretization')

fig.tight_layout()

09-12-20 14:49:11 pyemma.msm.estimators.implied_timescales.ImpliedTimescales[10] WARNING Some timescales could not be computed. Timescales array is smaller than expected or contains NaNs

WARNING:pyemma.msm.estimators.implied_timescales.ImpliedTimescales[10]:Some timescales could not be computed. Timescales array is smaller than expected or contains NaNs

What do we see?¶

We observe implied timescales that even look converged in the fine discretization case.

With poor clustering, the process cannot be resolved any more, i.e., the ITS does not convergence before the lag time exceeds the implied time scale.

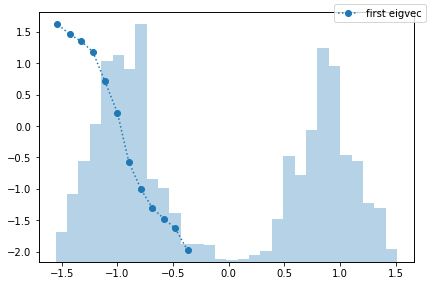

The obvious question is, what is the process that can be observed in the fine discretization case? PyEMMA checks for disconnectivity and thus should not find the process between the two wells. We follow this question by taking a look at the first eigenvector, which corresponds to that process.

[8]:

msm = pyemma.msm.estimate_markov_model(cluster_fine.dtrajs, 200)

fig, ax = plt.subplots()

ax.plot(

cluster_fine.clustercenters[msm.active_set, 0],

msm.eigenvectors_right()[:, 1],

'o:',

label='first eigvec')

tx = ax.twinx()

tx.hist(np.concatenate(data), bins=30, alpha=0.33)

tx.set_yticklabels([])

tx.set_yticks([])

fig.legend()

fig.tight_layout()

We observe a process which is entirely taking place in the left potential well. How come? PyEMMA estimates MSMs only on the largest connected set because they are only defined on connected sets. In this particular example, the largest connected set is the microstates in the left potential well. That means that we find a transition between the right and the left side of this well. This is not wrong, it might just be non-informative or even irrelevant.

The set of microstates which is used for the MSM estimation is stored in the MSM object msm and can be retrieved via .active_set.

[9]:

print('Active set: {}'.format(msm.active_set))

print('Active state fraction: {:.2}'.format(msm.active_state_fraction))

Active set: [17 18 19 20 21 22 23 24 25 26 27 28]

Active state fraction: 0.41

In this example we clearly see that some states are missing.

disconnected double-well trajectories with cross-overs¶

This example covers the worst-case scenario. We have two trajectories that live in two separated wells and never transition to the other one. Due to a very bad clustering, we believe that the data is connected. This can happen if we cluster a large dataset in very high dimensions where it is especially difficult to debug.

[10]:

file = mdshare.fetch('doublewell_disconnected.npy', working_directory='data')

data = [trj for trj in np.load(file)]

plot_1D_histogram_trajectories(data, max_traj_length=data[0].shape[0])

We, again, compare a reasonable to a deliberately poor discretization:

[11]:

cluster_fine = pyemma.coordinates.cluster_regspace(data, dmin=0.1)

cluster_poor = pyemma.coordinates.cluster_regspace(data, dmin=0.7)

print(cluster_fine.n_clusters, cluster_poor.n_clusters)

25 3

[12]:

fig, axes = plt.subplots(2, 2, figsize=(10, 8), sharey='col')

for cluster, ax in zip([cluster_poor, cluster_fine], axes):

plot_1D_histogram_trajectories(data, cluster=cluster, max_traj_length=data[0].shape[0], ax=ax[0])

its = pyemma.msm.its(cluster.dtrajs, lags=[1, 10, 100, 200, 300, 500, 800, 1000])

pyemma.plots.plot_implied_timescales(its, marker='o', ax=ax[1], nits=4)

axes[0, 0].set_title('poor discretization')

axes[1, 0].set_title('fine discretization')

fig.tight_layout()

What do we see?¶

With the fine discretization, we observe some timescales that are converged. These are most probably processes within one of the wells, similar to the ones we saw before.

The poor discretization induces a large error and describes artificial short visits to the other basin.

The timescales in the poor discretization are much higher but not converged.

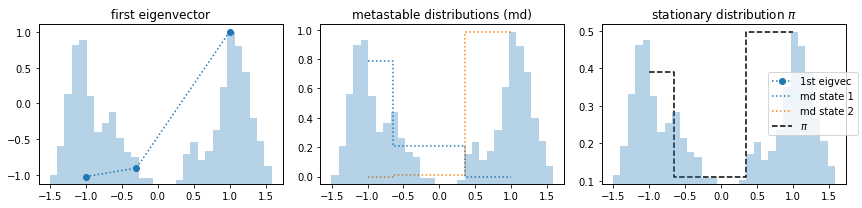

The reason for the high timescales in 3) are in fact the artificial cross-over events created by the poor discretization. This process was not actually sampled and is an artifact of bad clustering. Let’s look at it in more detail and see what happens if we estimate an MSM and even compute metastable states with PCCA++.

[13]:

msm = pyemma.msm.estimate_markov_model(cluster_poor.dtrajs, 200)

nstates = 2

msm.pcca(nstates)

index_order = np.argsort(cluster_poor.clustercenters[:, 0])

fig, axes = plt.subplots(1, 3, figsize=(12, 3))

axes[0].plot(

cluster_poor.clustercenters[index_order, 0],

msm.eigenvectors_right()[index_order, 1],

'o:',

label='1st eigvec')

axes[0].set_title('first eigenvector')

for n, metastable_distribution in enumerate(msm.metastable_distributions):

axes[1].step(

cluster_poor.clustercenters[index_order, 0],

metastable_distribution[index_order],

':',

label='md state {}'.format(n + 1),

where='mid')

axes[1].set_title('metastable distributions (md)')

axes[2].step(

cluster_poor.clustercenters[index_order, 0],

msm.pi[index_order],

'k--',

label='$\pi$',

where='mid')

axes[2].set_title('stationary distribution $\pi$')

for ax in axes:

tx = ax.twinx()

tx.hist(np.concatenate(data), bins=30, alpha=0.33)

tx.set_yticklabels([])

tx.set_yticks([])

fig.legend(loc=7)

fig.tight_layout()

We observe that the first eigenvector represents a process that does not exist, i.e., is an artifact. Nevertheless, the PCCA++ algorithm can separate metastable states in a way we would expect. It finds the two disconnected states. However, the stationary distribution yields arbitrary results.

How to detect disconnectivity?¶

Generally, hidden Markov models (HMMs) are much more reliable because they come with an additional layer of hidden states. Cross-over events are thus unlikely to be counted as “real” transitions. Thus, it is a good idea to estimate an HMM. What happens if we try to estimate a two state HMM on the same, poorly discretized data?

⚠️ It is important to note that the HMM estimation is initialized from the PCCA++ metastable states that we already analyzed.

[14]:

hmm = pyemma.msm.estimate_hidden_markov_model(cluster_poor.dtrajs, nstates, msm.lag)

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-1-0094551212ec> in <module>

----> 1 hmm = pyemma.msm.estimate_hidden_markov_model(cluster_poor.dtrajs, nstates, msm.lag)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/msm/api.py in estimate_hidden_markov_model(dtrajs, nstates, lag, reversible, stationary, connectivity, mincount_connectivity, separate, observe_nonempty, stride, dt_traj, accuracy, maxit)

1210 accuracy=accuracy, maxit=maxit)

1211 # run estimation

-> 1212 return hmsm_estimator.estimate(dtrajs)

1213

1214

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/_base/estimator.py in estimate(self, X, **params)

405 if params:

406 self.set_params(**params)

--> 407 self._model = self._estimate(X)

408 # ensure _estimate returned something

409 assert self._model is not None

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/msm/estimators/maximum_likelihood_hmsm.py in _estimate(self, dtrajs)

264 self._observable_set = _np.arange(self._nstates_obs)

265 self._dtrajs_obs = dtrajs

--> 266 self.set_model_params(P=transition_matrix, pobs=observation_probabilities,

267 reversible=self.reversible, dt_model=self.timestep_traj.get_scaled(self.lag))

268

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/msm/models/hmsm.py in set_model_params(self, P, pobs, pi, reversible, dt_model, neig)

94

95 """

---> 96 _MSM.set_model_params(self, P=P, pi=pi, reversible=reversible, dt_model=dt_model, neig=neig)

97 # set P and derived quantities if available

98 if pobs is not None:

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/msm/models/msm.py in set_model_params(self, P, pi, reversible, dt_model, neig)

148 self.update_model_params(P=P)

149 # pi might be derived from P, if None was given.

--> 150 self.update_model_params(pi=pi, dt_model=dt_model, neig=neig)

151

152 def __eq__(self, other):

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/_base/model.py in update_model_params(self, **params)

77 for key, value in params.items():

78 if not hasattr(self, key):

---> 79 setattr(self, key, value) # set parameter for the first time.

80 elif getattr(self, key) is None:

81 setattr(self, key, value) # update because this parameter is still None.

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/pyemma/msm/models/msm.py in pi(self, value)

284 if value is None and self.P is not None:

285 from msmtools.analysis import stationary_distribution as _statdist

--> 286 value = _statdist(self.P)

287 elif value is not None:

288 # check sum is one

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py in stationary_distribution(T)

342 # is the stationary distribution unique?

343 if not is_connected(T, directed=False):

--> 344 raise ValueError("Input matrix is not weakly connected. "

345 "Therefore it has no unique stationary "

346 "distribution. Separate disconnected components "

ValueError: Input matrix is not weakly connected. Therefore it has no unique stationary distribution. Separate disconnected components and handle them separately

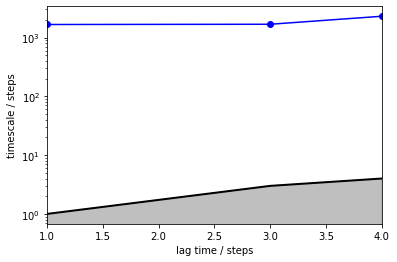

We are getting an error message which already explains what is going wrong, i.e., that the (macro-) states are not connected and thus no unique stationary distribution can be estimated. This is equivalent to having two eigenvalues of magnitude 1 or an implied timescale of infinity which is what we observe in the implied timescales plot.

[15]:

its = pyemma.msm.timescales_hmsm(cluster_poor.dtrajs, nstates, lags=[1, 3, 4, 10, 100])

pyemma.plots.plot_implied_timescales(its, marker='o', ylog=True);

09-12-20 14:49:22 pyemma.msm.estimators.maximum_likelihood_hmsm.MaximumLikelihoodHMSM[24] WARNING Ignored error during estimation: Input matrix is not weakly connected. Therefore it has no unique stationary distribution. Separate disconnected components and handle them separately

WARNING:pyemma.msm.estimators.maximum_likelihood_hmsm.MaximumLikelihoodHMSM[24]:Ignored error during estimation: Input matrix is not weakly connected. Therefore it has no unique stationary distribution. Separate disconnected components and handle them separately

09-12-20 14:49:22 pyemma.msm.estimators.maximum_likelihood_hmsm.MaximumLikelihoodHMSM[24] WARNING Ignored error during estimation: Input matrix is not weakly connected. Therefore it has no unique stationary distribution. Separate disconnected components and handle them separately

WARNING:pyemma.msm.estimators.maximum_likelihood_hmsm.MaximumLikelihoodHMSM[24]:Ignored error during estimation: Input matrix is not weakly connected. Therefore it has no unique stationary distribution. Separate disconnected components and handle them separately

09-12-20 14:49:22 pyemma.msm.estimators.implied_timescales.ImpliedTimescales[22] WARNING Estimation has failed at lagtimes: [ 10 100]. Details:

['Error at lag time 10: Input matrix is not weakly connected. Therefore it has '

'no unique stationary distribution. Separate disconnected components and '

'handle them separately',

'Error at lag time 100: Input matrix is not weakly connected. Therefore it '

'has no unique stationary distribution. Separate disconnected components and '

'handle them separately']

WARNING:pyemma.msm.estimators.implied_timescales.ImpliedTimescales[22]:Estimation has failed at lagtimes: [ 10 100]. Details:

['Error at lag time 10: Input matrix is not weakly connected. Therefore it has '

'no unique stationary distribution. Separate disconnected components and '

'handle them separately',

'Error at lag time 100: Input matrix is not weakly connected. Therefore it '

'has no unique stationary distribution. Separate disconnected components and '

'handle them separately']

09-12-20 14:49:22 pyemma.msm.estimators.implied_timescales.ImpliedTimescales[22] WARNING Some timescales could not be computed. Timescales array is smaller than expected or contains NaNs

WARNING:pyemma.msm.estimators.implied_timescales.ImpliedTimescales[22]:Some timescales could not be computed. Timescales array is smaller than expected or contains NaNs

[15]:

<AxesSubplot:xlabel='lag time / steps', ylabel='timescale / steps'>

As we see, the requested timescales above \(4\) steps could not be computed because the underlying HMM is disconnected, i.e., the corresponding timescales are infinity. The implied timescales that could be computed are most likely the same process that we observed from the fine clustering before, i.e., jumps within one basin.

In general, it is a non-trivial problem to show that processes were not sampled reversibly. In our experience, HMMs are a good choice here, even though situations can occur where they might not detect the problem as easily as in this example.

### poorly sampled double-well trajectories

Let’s now assume that everything worked out fine but our sampling is somewhat poor. This is a realistic scenario when dealing with large systems that were well-sampled but still contain only few events of interest. We expect that our trajectories are just long enough to sample a certain process but are too short to capture them with a large lag time. To rule out discretization issues and to make the example clear, we use the full data set for discretization.

[16]:

file = mdshare.fetch('hmm-doublewell-2d-100k.npz', working_directory='data')

with np.load(file) as fh:

data = [fh['trajectory'][:, 1]]

cluster = pyemma.coordinates.cluster_regspace(data, dmin=0.05)

We want to simulate a process that happens on a timescale that is on the order of magnitude of the trajectory length. To do so, we choose n_trajs chunks from the full data set that contain traj_length steps by splitting the original trajectory:

[17]:

traj_length = 10

n_trajs = 50

data_short_trajs = list(data[0].reshape((data[0].shape[0] // traj_length, traj_length)))[:n_trajs]

dtrajs_short = list(cluster.dtrajs[0].reshape((data[0].shape[0] // traj_length, traj_length)))[:n_trajs]

Now, let’s plot the trajectories (left panel) and estimate implied timescales (right panel) as above. Since we know the true ITS of this process, we visualize it as a dotted line.

[18]:

fig, axes = plt.subplots(1, 2, figsize=(10, 4))

for n, _traj in enumerate(data_short_trajs):

axes[0].plot(_traj, np.linspace(0, 1, _traj.shape[0]) + n)

lags = [i + 1 for i in range(9)]

its = pyemma.msm.its(dtrajs_short, lags=lags)

pyemma.plots.plot_implied_timescales(its, marker='o', ax=axes[1], nits=1)

its_reference = pyemma.msm.its(cluster.dtrajs, lags=lags)

pyemma.plots.plot_implied_timescales(its_reference, linestyle=':', ax=axes[1], nits=1)

fig.tight_layout()

09-12-20 14:49:25 pyemma.msm.estimators.implied_timescales.ImpliedTimescales[26] WARNING Some timescales could not be computed. Timescales array is smaller than expected or contains NaNs

WARNING:pyemma.msm.estimators.implied_timescales.ImpliedTimescales[26]:Some timescales could not be computed. Timescales array is smaller than expected or contains NaNs

We note that the slowest process is clearly contained in the data chunks and is reversibly sampled (left panel, short trajectory pieces color coded and stacked). Due to very short trajectories, we find that this process can only be captured at a very short MSM lag time (right panel). Above that interval, the slowest timescale diverges. Luckily, here we know that it is already converged at \(\tau = 1\), so we estimate an MSM:

[19]:

msm_short_trajectories = pyemma.msm.estimate_markov_model(dtrajs_short, 1)

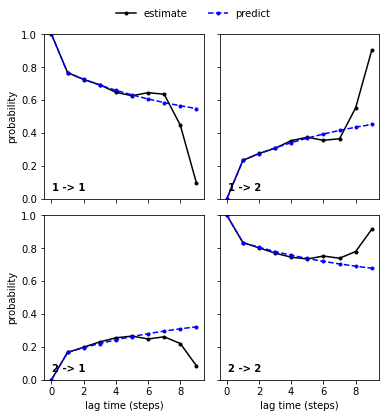

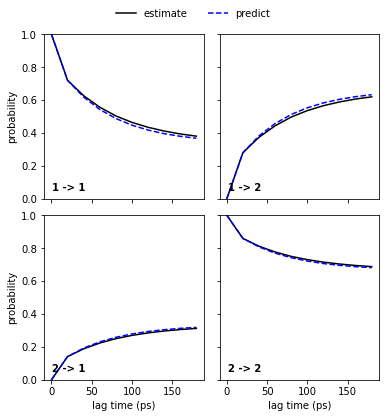

Let’s now have a look at the CK-test:

[20]:

pyemma.plots.plot_cktest(msm_short_trajectories.cktest(2), marker='.');

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 43 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 42 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 38 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 35 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 32 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 20 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

/storage/mi/marscher/software/miniconda3/conda-bld/pyemma-doc_1607519340854/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_pl/lib/python3.9/site-packages/msmtools/analysis/api.py:367: UserWarning: truncated eigendecomposition to contain 14 components

warnings.warn('truncated eigendecomposition to contain %s components' % new_k, category=UserWarning)

[20]:

(<Figure size 432x432 with 4 Axes>,

array([[<AxesSubplot:ylabel='probability'>, <AxesSubplot:>],

[<AxesSubplot:xlabel='lag time (steps)', ylabel='probability'>,

<AxesSubplot:xlabel='lag time (steps)'>]], dtype=object))

As already discussed, we cannot expect new estimates above a certain lag time to agree with the model prediction due to too short trajectories. Indeed, we find that new estimates and model predictions diverge at very high lag times. This does not necessarily mean that the model at \(\tau=1\) is wrong and in this particular case, we can even explain the divergence and find that it fits to the implied timescales divergence.

This example mirrors another incarnation of the sampling problem: Working with large systems, we often have comparably short trajectories with few rare events. Thus, implied timescales convergence can often be achieved only in a certain interval and CK-tests will not converge up to arbitrary multiples of the lag time. It is the responsibility of the modeler to interpret these results and to ensure that a valid model can be obtained from the data.

Please note that this is only a special case of a failed CK test. More general information about CK tests and what it means if it fails are explained in Notebook 03 ➜ 📓.

Case 2: low-dimensional molecular dynamics data (alanine dipeptide)¶

In this example, we will show how an ill-conducted TICA analysis can yield results that look metastable in the 2D histogram, but in fact are not describing the slow dynamics. Please note that this was deliberately broken with a nonsensical TICA-lagtime of almost trajectory length, which is 250 ns.

We start off with adding all atom coordinates. That is a non-optimal choice because it artificially blows up the dimensionality, but might still be a reasonable choice depending on the problem. A well-conducted TICA projection can extract the slow coordinates, as we will see at the end of this example.

[21]:

pdb = mdshare.fetch('alanine-dipeptide-nowater.pdb', working_directory='data')

files = mdshare.fetch('alanine-dipeptide-*-250ns-nowater.xtc', working_directory='data')

feat = pyemma.coordinates.featurizer(pdb)

feat.add_all()

data = pyemma.coordinates.load(files, features=feat)

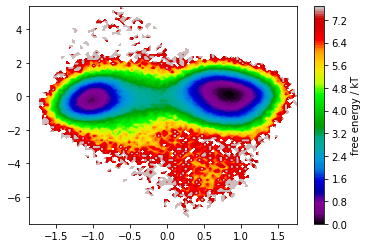

TICA analysis is conducted with an extremely high lag time of almost \(249.9\) ns. We map down to two dimensions.

[22]:

tica = pyemma.coordinates.tica(data, lag=data[0].shape[0] - 100, dim=2)

tica_output = tica.get_output()

pyemma.plots.plot_free_energy(*np.concatenate(tica_output).T, legacy=False);

[22]:

(<Figure size 432x288 with 2 Axes>,

<AxesSubplot:>,

{'mappable': <matplotlib.contour.QuadContourSet at 0x7f5a86232ca0>,

'cbar': <matplotlib.colorbar.Colorbar at 0x7f5a86b2ffd0>})

In the free energy plot, we recognize two defined basins that are nicely separated by the first TICA component. We thus continue with a discretization of this space and estimate MSM implied timescales.

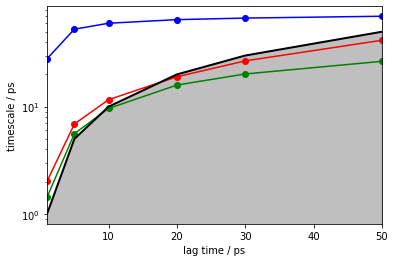

[23]:

cluster = pyemma.coordinates.cluster_kmeans(tica_output, k=200, max_iter=30, stride=100)

[24]:

its = pyemma.msm.its(cluster.dtrajs, lags=[1, 5, 10, 20, 30, 50])

pyemma.plots.plot_implied_timescales(its, marker='o', units='ps', nits=3);

[24]:

<AxesSubplot:xlabel='lag time / ps', ylabel='timescale / ps'>

Indeed, we observe a converged implied timescale. In this example we already know that it is way lower than expected, but in the general case we are unaware of the real dynamics of the system.

Thus, we estimate an MSM at lag time $20 $ ps. Coarse graining and validation will be done with \(2\) metastable states since we found \(2\) basins in the free energy landscape and have one slow process in the ITS plot.

[25]:

msm = pyemma.msm.estimate_markov_model(cluster.dtrajs, 20)

nstates = 2

msm.pcca(nstates);

[25]:

PCCA(P=array([[0.01891, 0.00286, ..., 0.00111, 0. ],

[0.00227, 0.02014, ..., 0. , 0.00019],

...,

[0.02518, 0. , ..., 0. , 0. ],

[0. , 0.0057 , ..., 0. , 0. ]]),

m=2)

[26]:

stride = 10

metastable_trajs_strided = [msm.metastable_assignments[dtrj[::stride]] for dtrj in cluster.dtrajs]

tica_output_strided = [i[::stride] for i in tica_output]

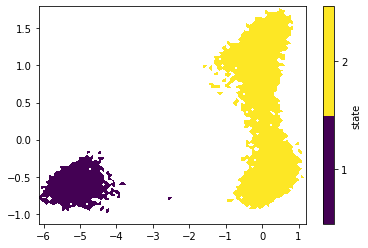

_, _, misc = pyemma.plots.plot_state_map(*np.concatenate(tica_output_strided).T,

np.concatenate(metastable_trajs_strided));

misc['cbar'].set_ticklabels(range(1, nstates + 1)) # set state numbers 1 ... nstates

As we see, the PCCA++ algorithm is perfectly able to separate the two basins. Let’s go on with a Chapman-Kolmogorow validation.

[27]:

pyemma.plots.plot_cktest(msm.cktest(nstates), units='ps');

[27]:

(<Figure size 432x432 with 4 Axes>,

array([[<AxesSubplot:ylabel='probability'>, <AxesSubplot:>],

[<AxesSubplot:xlabel='lag time (ps)', ylabel='probability'>,

<AxesSubplot:xlabel='lag time (ps)'>]], dtype=object))

Congratulations, we have estimated a well-validated MSM. The only question remaining is: What does it actually describe? For this, we usually extract representative structures as described in Notebook 00 ➜ 📓. We will not do this here but look at the metastable trajectories instead.

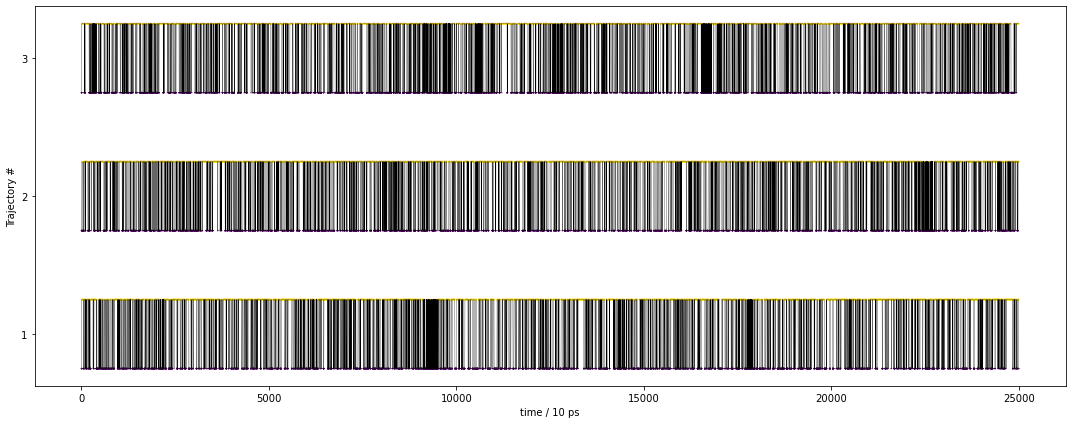

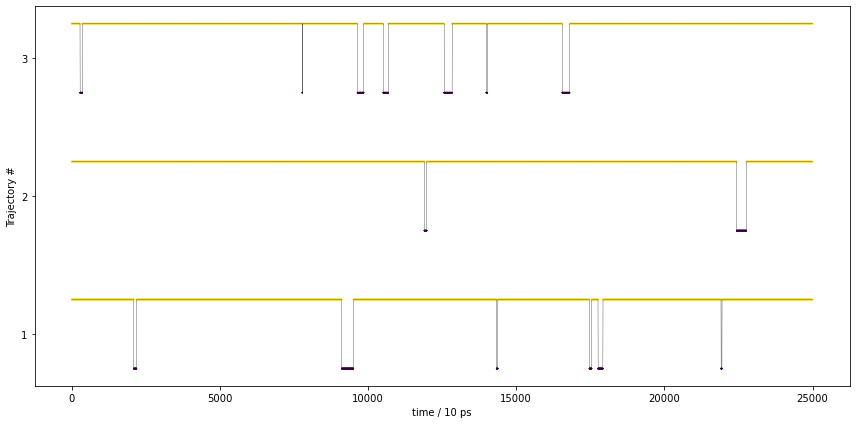

Let’s have a look at the trajectories as assigned to PCCA++ metastable states. We have already computed them before but not looked at their time dependence.

[28]:

fig, ax = plt.subplots(1, 1, figsize=(15, 6), sharey=True, sharex=True)

ax_yticks_labels = []

for n, pcca_traj in enumerate(metastable_trajs_strided):

ax.plot(range(len(pcca_traj)), msm.n_metastable * n + pcca_traj, color='k', linewidth=0.3)

ax.scatter(range(len(pcca_traj)), msm.n_metastable * n + pcca_traj, c=pcca_traj, s=0.1)

ax_yticks_labels.append(((msm.n_metastable * (2 * n + 1) - 1) / 2, n + 1))

ax.set_yticks([l[0] for l in ax_yticks_labels])

ax.set_yticklabels([str(l[1]) for l in ax_yticks_labels])

ax.set_ylabel('Trajectory #')

ax.set_xlabel('time / {} ps'.format(stride))

fig.tight_layout()

The above figure shows the metastable states visited by the trajectory over time. Each metastable state is color-coded, the trajectory is shown by the black line. This is clearly not a metastable trajectory as we would have expected.

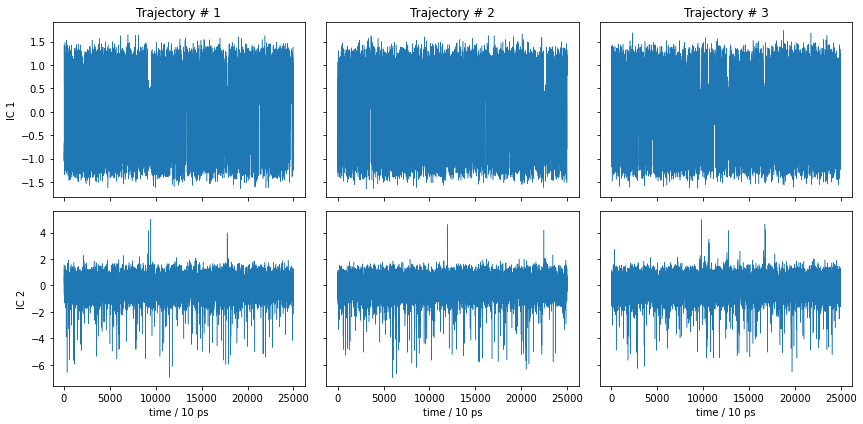

What did we do wrong? Let’s have a look at the TICA trajectories, not only the histogram!

[29]:

fig, axes = plt.subplots(2, 3, figsize=(12, 6), sharex=True, sharey='row')

for n, trj in enumerate(tica_output):

for dim, traj1d in enumerate(trj.T):

axes[dim, n].plot(traj1d[::stride], linewidth=.5)

for ax in axes[1]:

ax.set_xlabel('time / {} ps'.format(stride))

for dim, ax in enumerate(axes[:, 0]):

ax.set_ylabel('IC {}'.format(dim + 1))

for n, ax in enumerate(axes[0]):

ax.set_title('Trajectory # {}'.format(n + 1))

fig.tight_layout()

This is essentially noise, so it is not surprising that the metastable trajectories do not show significant metastability. The MSM nevertheless found a process in the above TICA components which, however, does not seem to describe any of the slow dynamics. Thus, the model is not wrong, it is just not informative.

As we see in this example, it can be instructive to keep the trajectories in mind and not to rely on the histograms alone.

⚠️ Histograms are no proof of metastability, they can only give us a hint towards defined states in a multi-dimensional state space which can be metastable.

In this particular example, we already know the issue: the TICA lag time was deliberately chosen way too high. That’s easy to fix.

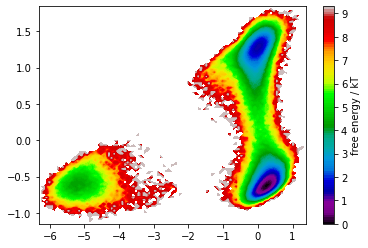

Let’s now have a look at how the metastable trajectories should look for a decent model such as the one estimated in Notebook 05 ➜ 📓. We will take the same input data, do a TICA transform with a realistic lag time of \(10\) ps, and coarse grain into \(2\) metastable states in order to compare with the example above.

[30]:

tica = pyemma.coordinates.tica(data, lag=10, dim=2)

tica_output = tica.get_output()

cluster = pyemma.coordinates.cluster_kmeans(tica_output, k=200, max_iter=30, stride=100)

pyemma.plots.plot_free_energy(*np.concatenate(tica_output).T, legacy=False);

[30]:

(<Figure size 432x288 with 2 Axes>,

<AxesSubplot:>,

{'mappable': <matplotlib.contour.QuadContourSet at 0x7f5acc8775e0>,

'cbar': <matplotlib.colorbar.Colorbar at 0x7f59e80f5fd0>})

As wee see, TICA yields a very nice state separation. We will see that these states are in fact metastable.

[31]:

msm = pyemma.msm.estimate_markov_model(cluster.dtrajs, lag=20)

msm.pcca(nstates);

[31]:

PCCA(P=array([[0.01881, 0.00247, ..., 0.00088, 0.01056],

[0.00321, 0.02554, ..., 0.00521, 0.00143],

...,

[0.00427, 0.01946, ..., 0.00533, 0.00293],

[0.0181 , 0.00188, ..., 0.00104, 0.01056]]),

m=2)

[32]:

metastable_trajs_strided = [msm.metastable_assignments[dtrj[::stride]] for dtrj in cluster.dtrajs]

[33]:

stride = 10

tica_output_strided = [i[::stride] for i in tica_output]

_, _, misc = pyemma.plots.plot_state_map(*np.concatenate(tica_output_strided).T,

np.concatenate(metastable_trajs_strided));

misc['cbar'].set_ticklabels(range(1, nstates + 1)) # set state numbers 1 ... nstates

We note that PCCA++ separates the two basins of the free energy plot. Let’s have a look at the metastable trajectories:

[34]:

fig, ax = plt.subplots(1, 1, figsize=(12, 6), sharey=True, sharex=True)

ax_yticks_labels = []

for n, pcca_traj in enumerate(metastable_trajs_strided):

ax.plot(range(len(pcca_traj)), msm.n_metastable * n + pcca_traj, color='k', linewidth=0.3)

ax.scatter(range(len(pcca_traj)), msm.n_metastable * n + pcca_traj, c=pcca_traj, s=0.1)

ax_yticks_labels.append(((msm.n_metastable * (2 * n + 1) - 1) / 2, n + 1))

ax.set_yticks([l[0] for l in ax_yticks_labels])

ax.set_yticklabels([str(l[1]) for l in ax_yticks_labels])

ax.set_ylabel('Trajectory #')

ax.set_xlabel('time / {} ps'.format(stride))

fig.tight_layout()

These trajectories show the expected behavior of a metastable trajectory, i.e., it does not quickly jump back and forth between the states.

Wrapping up¶

In this notebook, we have learned about some problems that can arise when estimating MSMs with “real world” data at simple examples. In detail, we have seen - irreversibly connected dynamics and what it means for MSM estimation, - fully disconnected trajectories and how to identify them, - connected but poorly sampled trajectories and how convergence looks in this case, - ill-conducted TICA analysis and what it yields.

The most important lesson from this tutorial is that histograms, which are usually calculated in a projected space, are not a sufficient means of identifying metastability or connectedness. It is crucial to remember that the underlying trajectories play the role of ground truth for the model. Ultimately, histograms only help us to understand this ground truth but cannot provide a complete picture.